publications

publications by categories in reversed chronological order. generated by jekyll-scholar.

2025

-

Facial Microscopic Structures Synthesis from a Single Unconstrained Image. Youyang Du, Lu Wang, and Beibei Wang. In Proceedings of the SIGGRAPH Conference Papers ’25, 2025.

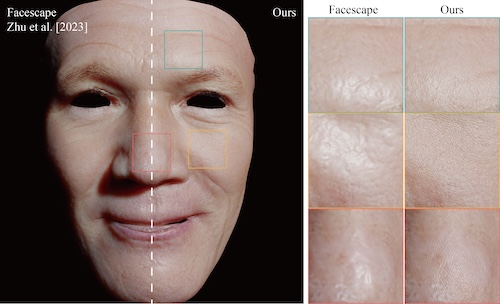

Obtaining 3D faces with microscopic structures from a single unconstrained image is challenging. The complexity of wrinkles and pores at the microscopic level, coupled with the blurriness of the input image, adds to the difficulty. However, the distribution of wrinkles and pores tends to follow a specialized pattern, which can provide a strong prior for synthesizing them. Therefore, a key to microstructure synthesis is a parametric wrinkle and pore model with controllable semantic parameters. Additionally, ensuring differentiability is essential for enabling optimization through gradient descent methods. To this end, we propose a novel framework designed to efficiently reconstruct facial micro-wrinkles and pores from naturally captured images. At the core of our framework is a differentiable representation of wrinkles and pores via a graph neural network (GNN), which can simulate the complex interactions between adjacent wrinkles through multiple graph convolutions. Furthermore, to overcome the inconsistency between the blurry input and clear wrinkles during optimization, we propose a Direction Distribution Similarity that keeps the wrinkle-directional features consistent. Consequently, our framework can synthesize facial microstructures from a blurry skin image patch, cropped from a naturally captured facial image, in about 2 seconds on average. Our framework integrates seamlessly with existing macroscopic facial detail reconstruction methods to enhance their detailed appearance. We showcase this capability on several works, including DECA, HRN, and FaceScape.

-

Diffusion-Guided Relighting for Single-Image SVBRDF Estimation. In Proceedings of the SIGGRAPH Asia Conference Papers ’25, 2025.

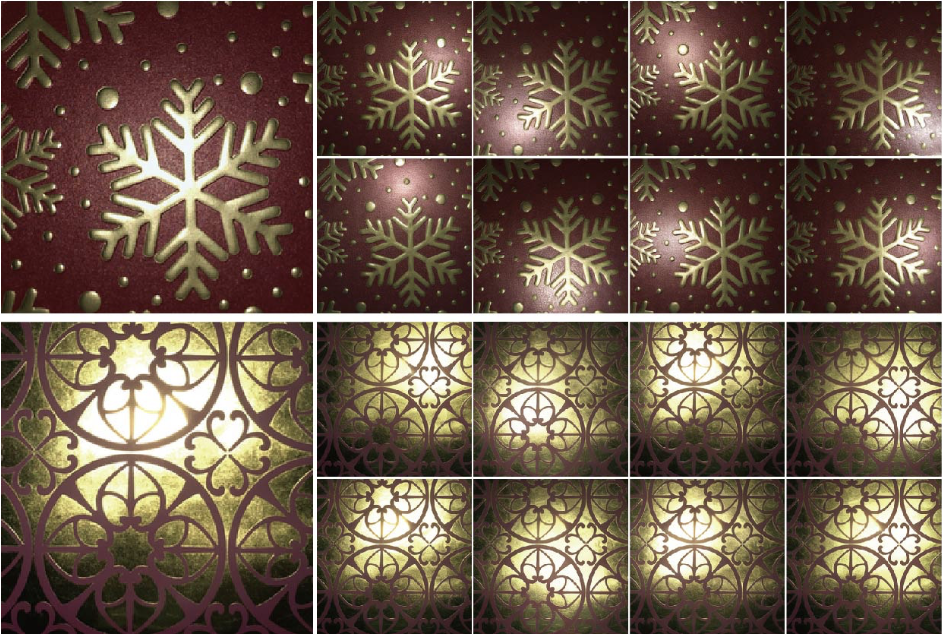

Recovering high-fidelity spatially varying bidirectional reflectance distribution function (SVBRDF) maps from a single image remains an ill-posed and challenging problem, especially in the presence of saturated highlights. Existing methods often fail to reconstruct the underlying texture in regions overwhelmed by intense specular reflections. Such bake-in artifacts, caused by highlight corruption, can be greatly alleviated by providing a series of images of the material under different lighting conditions. To this end, our key insight is to leverage the strong priors of diffusion models to generate images of the same material under varying lighting conditions. These generated images are then used to aid a multi-image SVBRDF estimator in recovering highlight-free reflectance maps. However, strong highlights in the input image lead to inconsistencies across the relighting results. Moreover, texture reconstruction becomes unstable in saturated regions, with variations in background structure, specular shape, and overall material color. These artifacts degrade the quality of SVBRDF recovery. To address this issue, we propose a shuffle-based background consistency module that extracts stable background features and implicitly identifies saturated regions. This guides the diffusion model to generate coherent content while preserving material structures and details. Furthermore, to stabilize the appearance of generated highlights, we introduce a lightweight specular prior encoder that estimates highlight features and then performs grid-based latent feature translation, injecting consistent specular contour priors while preserving material color fidelity. Both quantitative analysis and qualitative visualization demonstrate that our method enables stable neural relighting from a single image and can be seamlessly integrated into multi-input SVBRDF networks to estimate highlight-free reflectance maps.